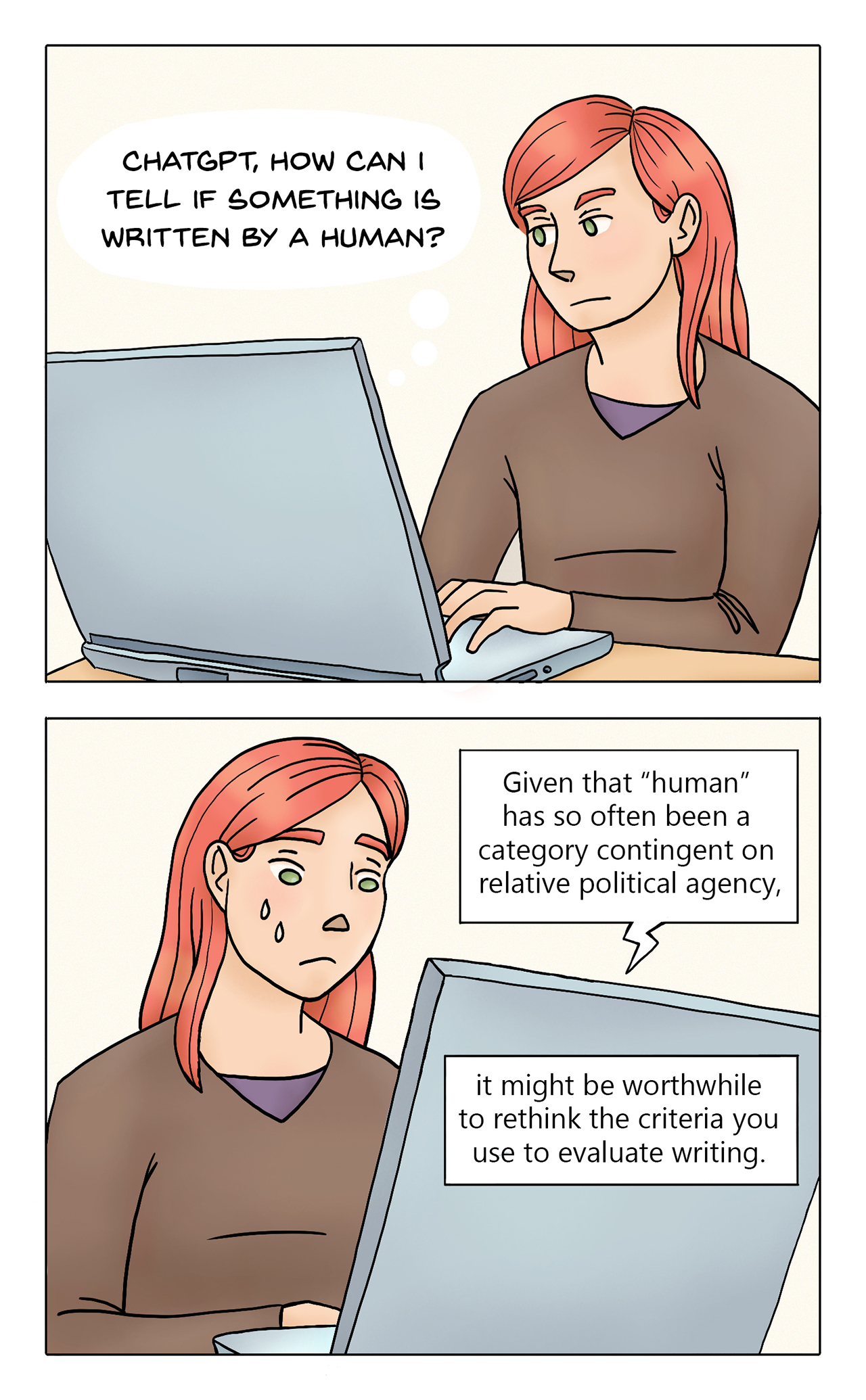

Out of curiosity, I started experimenting with ChatGPT this semester. There’s not much to say about it, save that it generates stale and flavorless writing that’s easy to recognize once you know what it looks like.

Unfortunately, now that I can recognize text generated by ChatGPT, it's hard not to see it everywhere. It's also hard not to get my feelings hurt when my students submit work written by ChatGPT. Why would they do me dirty like that?

So I’m not saying that I like ChatGPT. I actually kind of hate it.

Still, the potential for this sort of writing engine is incredible. What if it could work not just as an actually functional grammar checker, but also as a translator between different ways of self-expression? Wouldn't it be interesting if the model could be developed to "translate" an outline or a quickly written sketch of an idea into a piece of writing that a broader audience could more easily understand? Wouldn't it be nice if people who felt embarrassed or otherwise unable to express themselves had a way to put their thoughts on paper?

I understand how unrealistic it is to think that ChatGPT won't be abused by bad-faith actors. I also understand that there's no point in people in marginalized positions having a voice if the venues where they could be heard are shut down by floods of AI-generated spam. At the same time, I think it's probably healthy to keep an open mind and be as inclusive as possible when defining who (and what) counts as "human."